Cyber Security Practitioner | Embedded Systems | Full Stack AI Developer

Bridging the gap between Hardware Engineering, Software QA, and AI-First Innovation.

With more than 20 years of industry experience, I don't just test systems; I build them. My journey spans from designing PCBs for ISRO satellite projects to developing modern Full Stack AI web apps in 2026. I deliver technology that is robust, secure, and future-proof.

My Core Triad

Hardware test rigs, microcontroller prototypes, and AI-driven Web Applications.

Security threat modelling (STRIDE), embedded diagnostics, and automated defect injection.

Pioneering AI-First testing strategy and Vibe Coding techniques to accelerate development.

My Blog

Latest thoughts and tutorials on security, IoT, and automation.

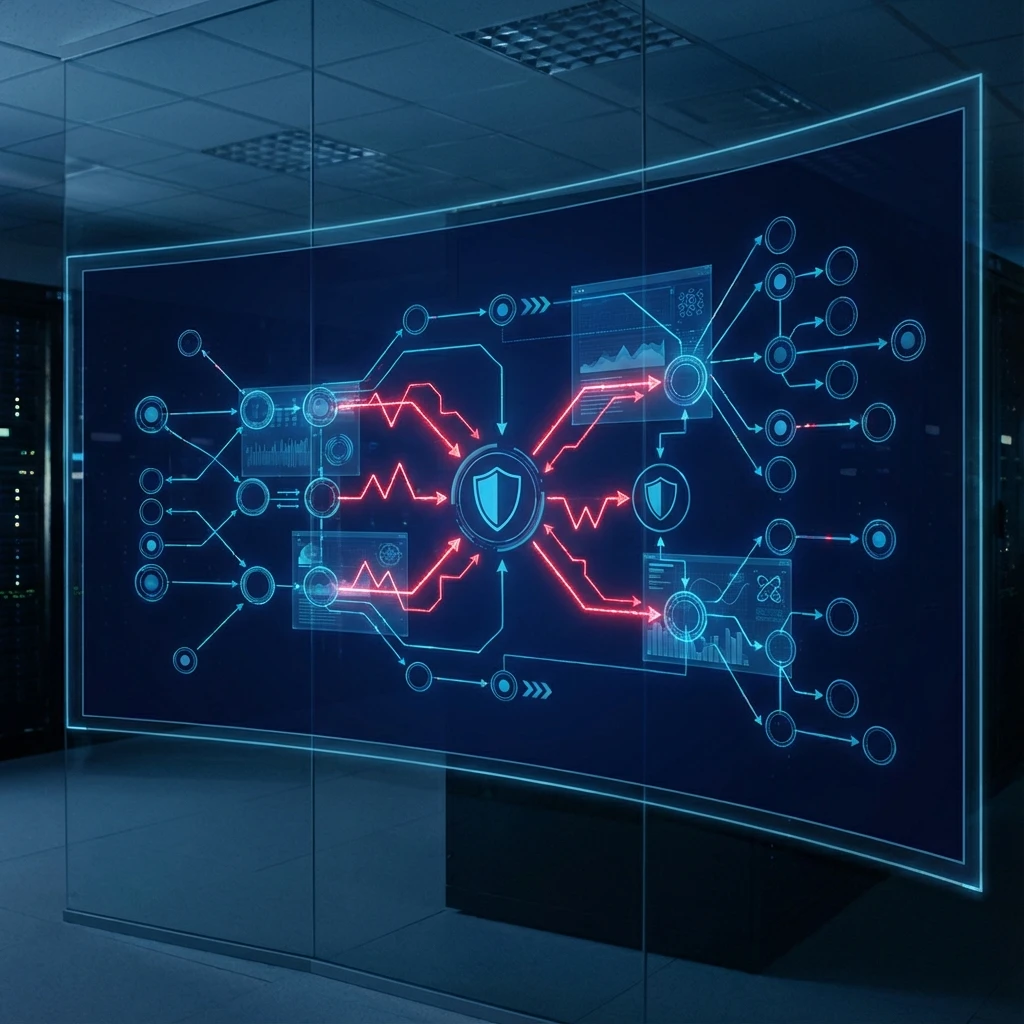

AI-Native Threat Modeling in 2026: From Static Diagrams to Living Risk Maps

Threat modeling used to mean slow workshops and outdated diagrams. In 2026, AI tools analyze your code and architecture in minutes. They identify threats, build attack trees, and suggest fixes automatically.

AI Security in 2026: When Robots Turn Against Us

December 2025 changed everything. New OWASP guidance helps defenders rein in malicious and misbehaving AI, and experts warn that 2026 may bring the first large-scale AI-driven attack.

Agentic Warfare: The 2025 Security Recap & 2026 Roadmap

2025 marked the year AI agents went from experimental tools to operational weapons. Here's what happened, what it means, and how to prepare your defenses for 2026.